How the context window impacts Large Language Models (LLMs)

- Dr. David Swanagon

- Aug 14, 2025

- 2 min read

Updated: Dec 31, 2025

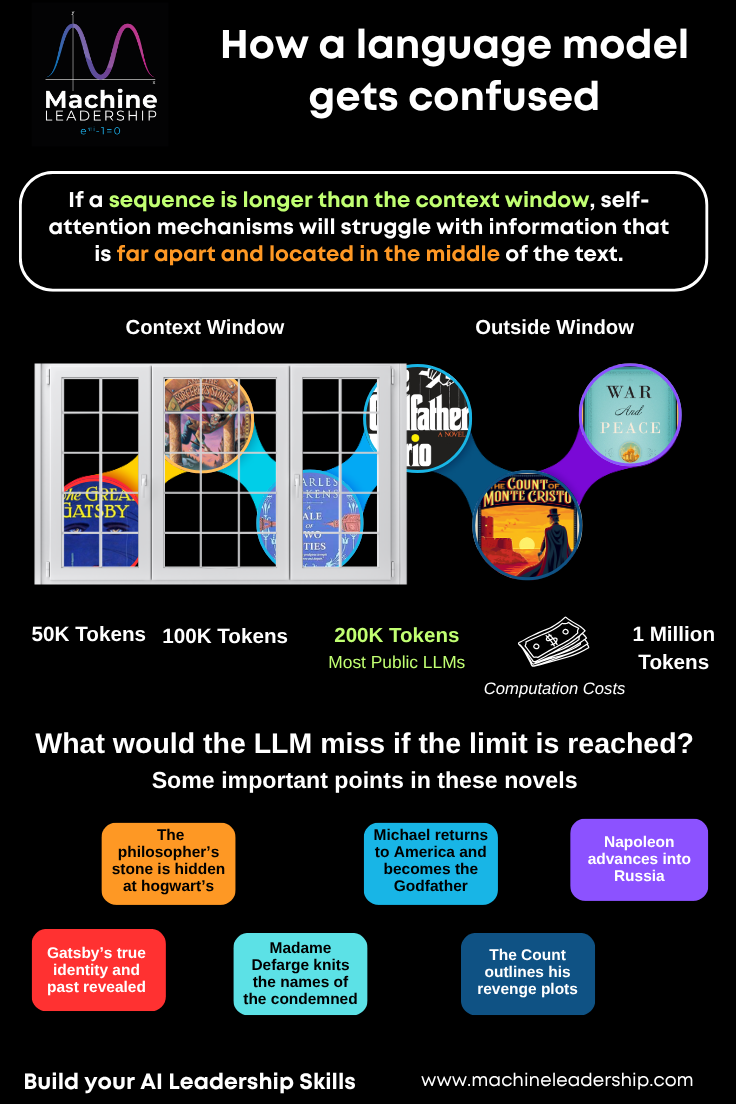

Large Language Models (LLMs) that rely on self-attention mechanisms are constrained by the context window. When a sequence becomes too long, models can make mistakes with tokens that are far apart or buried in the middle of the text. The trick is finding the right balance between cost and decision requirements.
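To give a rough feel for why long sequences are expensive, here is a minimal sketch. It assumes plain (unoptimized) self-attention, where every token is scored against every other token, so the work grows with the square of the sequence length. The function names are illustrative, and production models use optimizations that change the real numbers, so treat this as a picture of the trend rather than a measurement.

```python
def attention_score_count(seq_len: int) -> int:
    """Pairwise scores one attention head computes in one layer,
    assuming plain self-attention where every token attends to every token."""
    return seq_len * seq_len


def growth_factor(short_len: int, long_len: int) -> float:
    """How many times more pairwise scores the longer sequence needs."""
    return attention_score_count(long_len) / attention_score_count(short_len)


if __name__ == "__main__":
    for n in (8_000, 128_000, 1_000_000):
        print(f"{n:>9,} tokens -> {attention_score_count(n):,} pairwise scores")
    # Going from 8K to 1M tokens is 125x more text,
    # but far more than 125x the pairwise comparisons.
    print(f"8K -> 1M growth: {growth_factor(8_000, 1_000_000):,.0f}x")
```

The takeaway is that a longer window is not a linear upgrade: the text grows by one factor while the comparisons grow by that factor squared.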

Check out what would be missed if parts of some of the world's greatest novels fell outside the context window. No one would know Michael became the Godfather.

A key aspect of AI leadership is balancing data consumption with computational costs and process complexity. LLMs need a broad context window to interpret text. However, this goal must be balanced against the financial realities of processing 1M+ tokens.
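The cost side of that balance can be sketched with simple arithmetic. The example below assumes a provider that bills per million input tokens. The price of 3.00 USD per million tokens and the request volumes are hypothetical placeholders, not any vendor's actual rates, and the function names are illustrative.

```python
from decimal import Decimal

# ASSUMPTION: hypothetical price in USD per 1M input tokens, not a real vendor rate.
HYPOTHETICAL_PRICE_PER_MILLION = Decimal("3.00")


def prompt_cost(tokens: int, price_per_million: Decimal) -> Decimal:
    """Cost of sending one prompt of the given size."""
    return Decimal(tokens) / Decimal(1_000_000) * price_per_million


def daily_cost(tokens_per_request: int, requests_per_day: int,
               price_per_million: Decimal) -> Decimal:
    """Cost of sending the same-sized prompt many times in a day."""
    return prompt_cost(tokens_per_request, price_per_million) * requests_per_day


if __name__ == "__main__":
    for tokens in (8_000, 128_000, 1_000_000):
        cost = daily_cost(tokens, 500, HYPOTHETICAL_PRICE_PER_MILLION)
        print(f"{tokens:>9,} tokens x 500 requests/day -> ${cost:,.2f} per day")
```

Under these assumed numbers, filling the full window on every request costs 125 times as much as an 8K-token prompt, which is why leaders have to decide which decisions genuinely need the whole document.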

An interesting extension of this issue is copyright protection. Right now, language model developers train on copyrighted materials by relying on the "fair use" doctrine. This essentially allows GPT and its competitors to scale without having to pay for data access. This is true even if the works are tied to Non-Fungible Tokens (NFTs) that carry a digital certificate of ownership. On this view, the copyright is infringed only if the LLM reproduces output that substantially matches the protected work.

Why does this matter?

Anthropic recently announced that Claude reached the 1M-token threshold for its context window. The GPT, Gemini, and Llama families include models with similarly large windows. If these companies are allowed to train on copyrighted material without seeking permission or licensing agreements, the "fair use" provision will essentially eliminate copyright protections. How could an individual copyright owner make use of their works if the LLM is the main vehicle for society's content delivery?

Another risk is that language models begin interpreting copyrighted works in a manner that avoids infringement. Since millions of people rely on LLMs for information, the meaning behind published works could "change" simply to avoid legal challenges. In other words, the language model would create output that reimagines the meaning of the research or innovation to avoid lawsuits. Over time, the LLM's meaning becomes the de facto meaning.

The pace of AI innovation is allowing technology firms to design models that pursue this very path, while our regulators lack the skills to place meaningful constraints on the way LLMs capture, train on, and utilize data. Collective licenses are a potential pathway. The risk is that large firms (e.g., banks) buy up the collective licenses over time, much as they acquire companies through M&A. If this happens, then the companies that provide capital will also have indirect ownership over the data.

The best solution is for individuals to develop the skills needed to understand their data rights, pursue digital licensing agreements that protect their innovations, and control how LLMs train on their data. As the economists say, "There is no such thing as a free lunch." This motto should be applied to tech companies and their LLMs.
